The robots.txt file tells search engine crawlers which URLs of a website they may crawl. A Disallow rule tells bots not to crawl the listed web pages. It is useful for pages that do not need search traffic or are of no use to readers, so that Google does not waste time crawling them.
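As a rough illustration of how a Disallow rule is read by a crawler, here is a minimal Python sketch using the standard library's `urllib.robotparser`. The robots.txt content, the `example.com` URLs, and the helper name `is_crawl_allowed` are assumptions made up for this example; they are not taken from the tool described here.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""


def is_crawl_allowed(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the robots.txt text lets user_agent crawl url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse from text, no network access
    return parser.can_fetch(user_agent, url)


print(is_crawl_allowed(ROBOTS_TXT, "https://example.com/blog/post-1"))  # True
print(is_crawl_allowed(ROBOTS_TXT, "https://example.com/admin/login"))  # False
```

A real crawler would download the file from the site's `/robots.txt` location first; the text is inlined here so the sketch runs without a network connection.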

Step-1

On the home page of the check SEO tool, there is a search bar with a review button for the On-Page SEO report. Enter your URL in the search box to review your website.

Step-2

A page with a score will appear on the screen. To help you improve your on-page SEO, the tool provides a complete report covering all the required SEO factors.

Step-3

Google needs to crawl the pages of your website in order to index it, and all of the URLs are listed in one place in the XML sitemap. If any web pages are of no use to readers or do not need search traffic, we can disallow them in the robots.txt file. If your website has no Disallow rules, the report will appear as shown in the image.

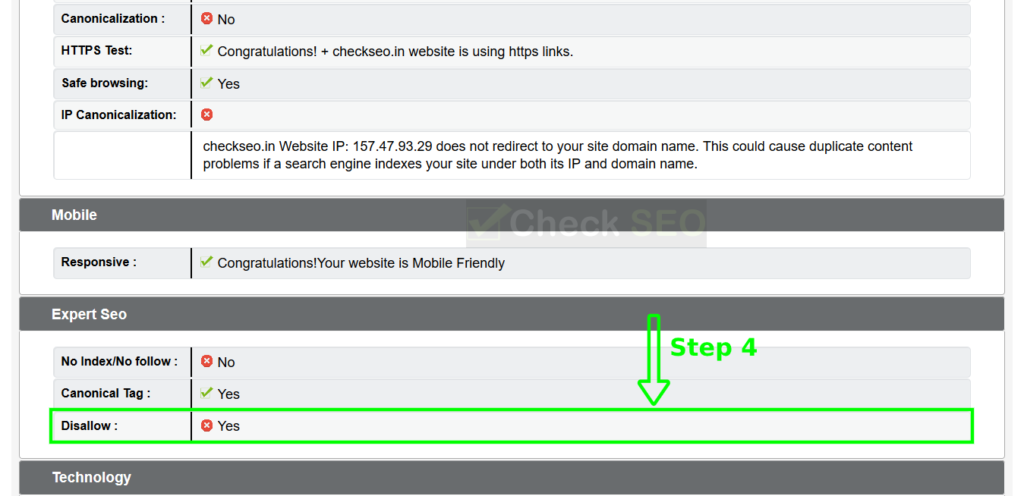
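To make the XML sitemap mentioned in this step more concrete, the sketch below lists the URLs stored in a sitemap using Python's standard `xml.etree.ElementTree`. The sitemap content, the `example.com` URLs, and the function name `sitemap_urls` are hypothetical; only the namespace is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

# Namespace used by sitemaps that follow the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap for an example site.
SITEMAP_XML = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/blog/post-1</loc></url>
</urlset>
"""


def sitemap_urls(xml_text: str) -> list:
    """Return every <loc> URL found in a sitemap document."""
    root = ET.fromstring(xml_text.encode("utf-8"))
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


print(sitemap_urls(SITEMAP_XML))
```

This only handles a plain URL sitemap; a sitemap index file that points to other sitemaps would need an extra step.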

Step-4

Google's bots crawl a website's URLs in order to index the site. In some cases a web page may not be useful to users or visitors, and a Disallow rule stops the bots from crawling that URL. If your website uses Disallow in robots.txt, the report will be displayed as in the image given below.
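One way a report like this could tell the two cases apart (no Disallow rules versus some Disallow rules) is to read the robots.txt text and collect the disallowed paths for each user agent. The following Python sketch is an assumption about how such a check might work, not the tool's actual implementation; `disallow_rules` and the sample file contents are invented for the example.

```python
def disallow_rules(robots_txt: str) -> dict:
    """Map each user agent to the list of paths its Disallow lines block."""
    rules = {}
    agents = []        # user agents of the group currently being read
    in_rules = False   # True once the current group has an Allow/Disallow line
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if in_rules:  # a User-agent line after rules starts a new group
                agents, in_rules = [], False
            agents.append(value)
            rules.setdefault(value, [])
        elif field in ("allow", "disallow"):
            in_rules = True
            if field == "disallow" and value:  # an empty Disallow blocks nothing
                for agent in agents:
                    rules[agent].append(value)
    return rules


# Hypothetical files: one with Disallow rules, one without.
WITH_DISALLOW = "User-agent: *\nDisallow: /admin/\nDisallow: /cart/\n"
WITHOUT_DISALLOW = "User-agent: *\nDisallow:\n"

print(disallow_rules(WITH_DISALLOW))     # {'*': ['/admin/', '/cart/']}
print(disallow_rules(WITHOUT_DISALLOW))  # {'*': []}
```

If every list in the result is empty, the site has no disallowed pages, which corresponds to the Step-3 case; any non-empty list corresponds to the case described in this step.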